It's been a while since I posted, and I've been spending a lot of time at work fighting with Redis, one of the darling databases of the NoSQL era, so I thought I'd grace y'all with a brief rant about Redis: what it's good at, what it's bad at, and so on.

What is Redis?

Redis is an open-source, moderately structured, in-memory key-value store. This means that, unlike a full relational database, it doesn't have a fixed schema, it can't perform server-side operations like joining and filtering data, and, theoretically, it's faster. Redis looks an awful lot like memcache, but it supports basic data structures (such as lists and hashes) and can theoretically write its data to disk instead of just keeping it in memory.

For these reasons, I am wholly, 100% in favor of using Redis, as long as you use it strictly as a memcache replacement: a temporary cache to make your application faster, or a backing store for non-essential features built on short-lived data, like password resets. It can be safely(-ish) restarted thanks to its ability to persist to disk, and the data structures make it a lot easier to organize code. (I have definitely seen memcache instances where people emulated lists by having a bunch of keys named "keyname_1", "keyname_2", etc. It was not good code.)
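To make the "use it as a cache" case concrete, here's a minimal sketch of the two patterns above: a password-reset token that expires on its own, and a real list in place of hand-rolled `keyname_1`, `keyname_2` keys. The helper names (`store_reset_token`, `append_event`) and key prefixes are hypothetical. `FakeRedis` is a tiny in-memory stand-in so the sketch runs without a server; it only mimics the few redis-py calls used here (`set` with `ex=`, `get`, `rpush`, `lrange`) and is not a faithful Redis.

```python
import secrets
import time


class FakeRedis:
    """In-memory stand-in so this sketch runs without a server.

    Mimics only the redis-py calls used below; it is NOT a faithful Redis.
    """

    def __init__(self):
        self._data = {}
        self._deadlines = {}  # key -> monotonic time after which it is gone

    def set(self, name, value, ex=None):
        self._data[name] = value
        if ex is None:
            self._deadlines.pop(name, None)
        else:
            self._deadlines[name] = time.monotonic() + ex
        return True

    def get(self, name):
        deadline = self._deadlines.get(name)
        if deadline is not None and time.monotonic() >= deadline:
            # Lazily drop expired keys on access.
            self._data.pop(name, None)
            del self._deadlines[name]
        return self._data.get(name)

    def rpush(self, name, *values):
        items = self._data.setdefault(name, [])
        items.extend(values)
        return len(items)

    def lrange(self, name, start, end):
        items = self._data.get(name, [])
        # Redis's end index is inclusive; -1 means "through the last element".
        return items[start:] if end == -1 else items[start:end + 1]


def store_reset_token(client, user_id, ttl_seconds=3600):
    """Short-lived password-reset token that expires on its own after ttl_seconds."""
    token = secrets.token_urlsafe(16)
    client.set(f"pwreset:{user_id}", token, ex=ttl_seconds)
    return token


def append_event(client, user_id, event):
    """One real list per user instead of events_1, events_2, ... keys."""
    return client.rpush(f"events:{user_id}", event)
```

Against a real server you'd pass a `redis.Redis(...)` client instead of `FakeRedis`; keep in mind that redis-py hands back bytes unless the client is created with `decode_responses=True`.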

What is Redis not?

Redis is not a persistent database. It has disk persistence (which I will go into at great depth below), but it can never operate unless 100% of its data fits in memory. Contrast this to traditional relational databases (like PostgreSQL, MySQL, or Oracle), or to other key-value stores (like Cassandra or Riak), all of which can operate with only a "working set" of data resident in memory, and the rest on disk. Given that ECC-RAM is still about $15/GB and even the fastest SSDs are about $2.50/GB, this makes Redis a very expensive way to store your data. It makes sense if you're going to be careful to ensure that the only data in Redis is hot data (which you might if you're using it as a cache), but it absolutely does not make sense for long-term, sparsely-accessed data.
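The cost argument is just arithmetic, so here it is spelled out using the per-GB prices quoted above. The dataset size and hot fraction in the example are made-up illustrations, and the model ignores replicas, overhead, and everything else that makes real bills bigger.

```python
RAM_PER_GB = 15.00  # ECC RAM, $/GB (price quoted in the text)
SSD_PER_GB = 2.50   # fast SSD, $/GB (price quoted in the text)


def all_in_memory_cost(dataset_gb):
    """Redis-style: every byte of the dataset has to live in RAM."""
    return dataset_gb * RAM_PER_GB


def working_set_cost(dataset_gb, hot_fraction):
    """Disk-backed store: everything on SSD, only the hot working set cached in RAM."""
    return dataset_gb * SSD_PER_GB + dataset_gb * hot_fraction * RAM_PER_GB
```

For a hypothetical 500 GB dataset where 10% of it is hot, that's $7,500 of RAM for the all-in-memory approach versus $2,000 for SSD plus a RAM cache of the working set.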

Redis is also not highly available. It has replication (which I will go into at great depth below), but only barely. It doesn't have any real clustering support (yes, I'm aware of WAIT and find it unsatisfactory), doesn't have any multi-master support, and just really seems to not want to be used in a highly-available fashion. If you want a key-value store that does that, I suggest you look into Riak.

Disk Persistence

Right out of the gate, one of Redis's biggest wins over memcache is its ability to persist to disk. It has two mechanisms for doing this: "RDB" and "AOF". RDB periodically takes a snapshot of everything in memory and writes it to disk in a somewhat optimized format; AOF appends every single write command run against a redis instance to a single file, which it periodically rewrites to remove redundant entries. Both have some pretty serious limitations that keep me from recommending either of them for production if you can possibly avoid it.

RDB

In order to write its full snapshot to disk, RDB forks the main redis process and has the child process write the snapshot. This is all well and good on Linux, where copy-on-write memory means that the fork should be relatively cheap. However, the "copy" part of copy-on-write does kick in on an active server: every page the parent modifies while the child is still writing gets duplicated. Quite often, on a moderately loaded server, you can build up several additional GB of memory usage during an RDB write, so you'd better make sure to leave lots of RAM free.
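To get a feel for how much extra RAM a snapshot can eat, here's a back-of-the-envelope sketch. It's a toy model, not how Redis accounts memory: it assumes 4 KiB pages, that each write dirties exactly one uniformly random page, and that a page is copied the first time the parent touches it after `fork()`. Real workloads with hot keys will touch fewer distinct pages; big values or hash-table rehashing can touch more.

```python
import math

PAGE_SIZE = 4096  # assumed page size (typical x86-64 Linux, no huge pages)


def expected_cow_bytes(dataset_bytes, writes_per_sec, snapshot_secs,
                       page_size=PAGE_SIZE):
    """Expected extra RSS from copy-on-write while an RDB child is running.

    Toy model: each write dirties one uniformly random page of the dataset,
    and a page is copied the first time the parent touches it after fork().
    """
    pages = math.ceil(dataset_bytes / page_size)
    writes = writes_per_sec * snapshot_secs
    # Probability that a given page is never written during the snapshot.
    untouched = (1 - 1 / pages) ** writes
    return pages * (1 - untouched) * page_size


if __name__ == "__main__":
    gib = 2 ** 30
    extra = expected_cow_bytes(8 * gib, writes_per_sec=10_000, snapshot_secs=60)
    print(f"~{extra / gib:.1f} GiB extra")
```

With those hypothetical numbers (an 8 GiB dataset, 10,000 writes/s, a 60-second snapshot) the model comes out to roughly 2 GiB of extra memory, which is in line with the "several additional GB" seen in practice.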

AOF

At first blush, the append-only file (AOF) looks a lot like the binary write-ahead logs used for replication in standard relational databases (binlogs in MySQL, WAL in PostgreSQL). Basically, every time a write command comes in, redis appends it to the AOF. Then, if the server restarts, it can simply replay the entire log to get back to the state it was in before.

Unfortunately, AOF logs have what I consider a major problem: each is a single log of every write since the beginning of time for the server. This means that if you have a lot of updates to existing keys (common when using Redis as a database), or a lot of keys expiring (common when using Redis as a cache), the AOF will be many, many times the size of your database and will take minutes to hours to replay, which is very bad if you're trying to recover from an outage.

Redis has a solution for this: BGREWRITEAOF. This causes redis to "optimize" the AOF, rewriting it to eliminate unnecessary updates and expired keys. Of course, since the single AOF log contains every statement since the birth of the database, this process takes unacceptably long on all but the smallest of databases, and tends to consume an inordinate amount of I/O.
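Here's a toy model of why the log balloons and what a rewrite buys you. It only understands `SET` and `DEL` (with `DEL` standing in for expirations too), stores commands as Python tuples rather than the real on-disk format, and derives the compacted log from the replayed state; it's an illustration of the idea, not of Redis's implementation.

```python
def apply(state, cmd):
    """Apply one logged write command to an in-memory dict."""
    op, key, *args = cmd
    if op == "SET":
        state[key] = args[0]
    elif op == "DEL":  # stands in for explicit deletes and key expirations
        state.pop(key, None)
    else:
        raise ValueError(f"op not modeled here: {op}")


def replay(log):
    """Rebuild the dataset by replaying the whole log, as a restart would."""
    state = {}
    for cmd in log:
        apply(state, cmd)
    return state


def rewrite(log):
    """Compact the log to one SET per live key, derived from the current state."""
    return [("SET", key, value) for key, value in replay(log).items()]


if __name__ == "__main__":
    # 100 hot counters updated over and over, then half of them expire.
    log = [("SET", f"counter:{i % 100}", i) for i in range(100_000)]
    log += [("DEL", f"counter:{i}") for i in range(50)]
    compact = rewrite(log)
    assert replay(compact) == replay(log)
    print(len(log), "log entries vs", len(compact), "after rewrite")
```

This prints `100050 log entries vs 50 after rewrite`: the dataset is 50 keys, but a restart without a rewrite has to chew through all 100,050 commands.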

The real problem with AOF is that there is no way to start it from any point in time other than the start of the server. You can't have an RDB from time x and then only keep AOF logs since x. There's no way to reasonably combine the efficiencies of RDB and AOF, despite the fact that every other database system has supported this behavior for decades.
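For contrast, this is roughly the snapshot-plus-tail scheme other databases use, as a toy sketch. `Durable` is a hypothetical class, not anything Redis ships: a checkpoint folds the log into a snapshot and throws away the covered entries, so recovery is one snapshot load plus a short replay instead of the whole history.

```python
from dataclasses import dataclass, field


def apply(state, cmd):
    """Apply one ("SET", key, value) or ("DEL", key) command to a dict."""
    op, key, *args = cmd
    if op == "SET":
        state[key] = args[0]
    elif op == "DEL":
        state.pop(key, None)
    else:
        raise ValueError(f"op not modeled here: {op}")


@dataclass
class Durable:
    """Hypothetical store combining a snapshot with a log of writes since it."""
    snapshot: dict = field(default_factory=dict)  # state as of the last checkpoint
    tail: list = field(default_factory=list)      # only the writes after that checkpoint

    def write(self, cmd):
        # A real system would append and fsync here before acknowledging.
        self.tail.append(cmd)

    def checkpoint(self):
        """Fold the tail into a new snapshot, then discard the covered log entries."""
        for cmd in self.tail:
            apply(self.snapshot, cmd)
        self.tail.clear()

    def recover(self):
        """Restart cost: load the snapshot, replay only the short tail."""
        state = dict(self.snapshot)
        for cmd in self.tail:
            apply(state, cmd)
        return state
```

However many writes happened before the last checkpoint, a restart only replays what's in `tail`.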

Replication

Redis has asynchronous replication. If you run any kind of large product, that should warm your heart a bit — replication is the best way to build high availability and failure-tolerant systems. Unfortunately, Redis's replication is probably the most naive, useless form of asynchronous replication I've used — I guess it falls somewhere between "sqlite on an nfs share" and "postgresql 8.x".

Replication is naive

Redis implements replication by first issuing a SYNC command when a slave connects (which just does an RDB save and copies it over a TCP socket), and then streaming every subsequent write command, in the same format as the AOF. That's all. If a node loses its network connection for a while, it has to copy the whole database again, which is almost never tolerable with non-trivially-small databases and multiple datacenters. Redis 2.8 attempts to improve this with the PSYNC command, which is the most naive thing possible: the master keeps a fixed-size in-memory backlog of recent writes (sized with repl-backlog-size), and if a slave disconnects for less time than that backlog covers, it replays from the backlog instead of re-downloading the whole database. Oh, Equinix needs to perform power maintenance and one of your datacenters is going to be offline for a couple of hours? Too bad, I guess you'll need to buffer several hours of data in memory at all times on your master, or you'll have to retransfer all of your redis databases across the network. You should just live in the magical fairyland where nothing ever breaks!
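The sizing math is simple, which is exactly the problem. In Redis 2.8 the knob is `repl-backlog-size` on the master (default `1mb`, which redis.conf defines as 1024*1024 bytes). The write rate below is a made-up example, and a steady rate is an optimistic assumption; bursty traffic needs more.

```python
MiB = 2 ** 20


def backlog_bytes_needed(write_bytes_per_sec, outage_secs):
    """Backlog size needed to survive an outage without a full resync.

    Assumes a steady replication-stream rate; real traffic is burstier,
    so treat the result as a lower bound.
    """
    return write_bytes_per_sec * outage_secs


def max_outage_secs(backlog_bytes, write_bytes_per_sec):
    """How long a slave can be disconnected before the backlog wraps around."""
    return backlog_bytes / write_bytes_per_sec
```

At a hypothetical 2 MiB/s of writes, riding out a two-hour datacenter outage takes 14,400 MiB (about 14 GiB) of master RAM devoted purely to the backlog, while the default 1 MiB backlog covers half a second.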

Replication is un-monitorable

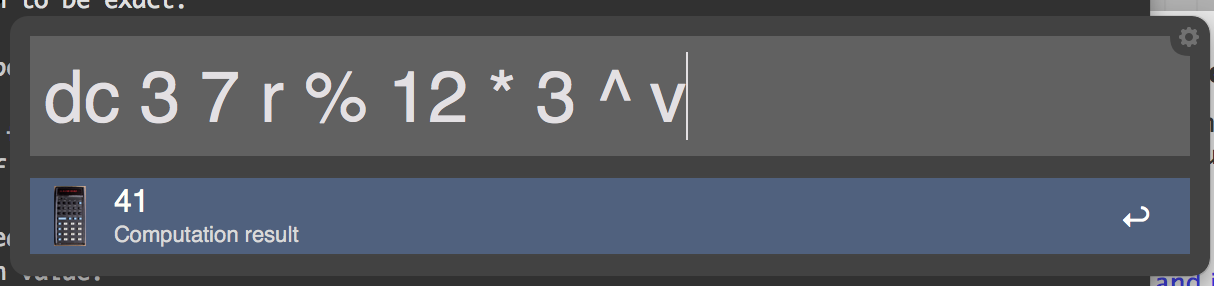

Would you like to know how far behind your slaves are? Well, you'd better implement your own heartbeating, because Redis doesn't expose that. The manual helpfully suggests that you can determine whether a slave is caught up to the master by checking whether both have the same number of keys. Because there are no operations in redis-land that can change data without changing the number of keys. There are a couple of replication-related fields in INFO, but they don't actually help if you're trying to figure out the exact status of a cluster:

- master_link_status seems to say "up" all the time, even when it isn't.
- master_last_io_seconds_ago is relatively useless data: it doesn't differentiate between replication issues and the master simply not doing anything (and it only has 1s granularity, which is useless).

That's it. Nothing else.
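The homegrown heartbeat looks something like this sketch: write a timestamp to a key on the master on a timer, read it back from each slave, and the difference is your lag. Because the master writes the heartbeat on a schedule whether or not there is other traffic, this also tells "master idle" apart from "replication stuck". The key name and the 100 ms interval are hypothetical choices, and it assumes the master and monitoring host clocks are synchronized (e.g. via NTP); resolution is limited by the heartbeat interval. `master` and `replica` are any clients with redis-py-style `set`/`get` methods.

```python
import time

HEARTBEAT_KEY = "monitoring:heartbeat"  # hypothetical key name


def encode_heartbeat(ts):
    return repr(float(ts))


def lag_from_heartbeat(raw, now):
    """Seconds between the master writing the heartbeat and now; None if absent."""
    if raw is None:
        return None
    if isinstance(raw, bytes):  # redis-py returns bytes by default
        raw = raw.decode()
    return now - float(raw)


def write_heartbeat(master, clock=time.time):
    """Run this on a timer (say, every 100 ms) against the master."""
    master.set(HEARTBEAT_KEY, encode_heartbeat(clock()))


def replica_lag(replica, clock=time.time):
    """Read the replicated heartbeat from a slave and compute its lag."""
    return lag_from_heartbeat(replica.get(HEARTBEAT_KEY), clock())
```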

Replication is one-direction

Something I would absolutely love to have in Redis is master-master replication. Imagine if you could set up two servers and write to either of them and have them become eventually-consistent. It would be like some kind of key-value nirvana! MySQL has supported this feature for 14 years (since MySQL 3.23). Unfortunately, Redis doesn't have any support for this. An instance can be a master or a slave, but never both. And there's no reconciliation in the replication code anyway. Hm, maybe this belonged in the "Replication is naive" section...
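For a sense of what "reconciliation" would even mean, here's one simple strategy, last-writer-wins, as a sketch. This is a swapped-in illustration, not something Redis or MySQL provides: each value carries a timestamp and writer id, and merging keeps the newest. It silently drops the loser of concurrent writes, trusts clocks, and doesn't handle deletes (those would need tombstones).

```python
from typing import Dict, Tuple

# key -> (timestamp, node_id, value). Comparing whole tuples means the newest
# timestamp wins, and node_id (then value) breaks ties deterministically.
Entry = Tuple[float, str, str]


def merge(a: Dict[str, Entry], b: Dict[str, Entry]) -> Dict[str, Entry]:
    """Last-writer-wins merge of two replicas' key spaces.

    Commutative, associative and idempotent, so two masters that exchange
    state in any order converge to the same result.
    """
    merged = dict(a)
    for key, entry in b.items():
        merged[key] = max(merged.get(key, entry), entry)
    return merged
```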

High Availability

Well, Redis is so simple, at least it should be easy to make highly-available, right?

C'mon, you know the answer to that.

Of course it isn't.

As discussed above, you can't have a cluster of eventually-consistent Redisen. That right there rules out the HA strategy commonly employed by key-value stores of just having a lot of them.

Well, at least we could have a single master and a bunch of read slaves, and then promote one quickly to be master, right? No, wrong. Since Redis doesn't expose replication coordinates, there's no way to know which of the read slaves has the latest data, so there's no way to know which one to promote.
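If you've built the heartbeat workaround yourself, you can at least approximate coordinates: promote whichever slave has applied the newest heartbeat. A sketch, assuming you've already read each slave's heartbeat key (the function name is hypothetical). Writes made after that heartbeat are invisible to this check, so it narrows the choice rather than proving nothing was lost.

```python
from typing import Dict, Optional


def pick_promotion_candidate(applied: Dict[str, Optional[float]]) -> Optional[str]:
    """Pick the slave that has applied the newest master heartbeat.

    applied maps slave name -> newest heartbeat timestamp read from that slave
    (None if it could not be read).
    """
    readable = {name: ts for name, ts in applied.items() if ts is not None}
    if not readable:
        return None
    # Compare by timestamp, then by name so ties resolve deterministically.
    return max(readable, key=lambda name: (readable[name], name))
```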

Well, okay, at least you can use a sharding redis proxy like Twitter's twemproxy to distribute your data to lots of redis masters, and if one of them goes down, you only lose 1/n of your keys, right? Well, sort of. Twemproxy fails to support an absolutely stupefying 42 of redis's 98 commands. Some of these make sense, but the fact that twemproxy kills your connection if you issue a PING is just madness (and, in fact, is ticketed). Twemproxy specifically has other issues; my favorite is issue #6, which is that you can't change the twemproxy config file without downtime (which I've built a horrible/hilarious workaround for at Uber). Real A-class software in this Redis community.
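The "lose 1/n of your keys" arithmetic is easy to check with a sketch of plain modulo hashing over an MD5 digest. This is a generic illustration, not twemproxy's exact key distribution; twemproxy also offers consistent hashing (ketama), which matters because with plain modulo, changing n remaps most of your keys.

```python
import hashlib


def shard_for(key: str, n_shards: int) -> int:
    """Map a key to a shard with plain modulo hashing over an MD5 digest."""
    digest = hashlib.md5(key.encode()).digest()
    return int.from_bytes(digest[:8], "big") % n_shards


def fraction_lost(keys, n_shards: int, dead_shard: int) -> float:
    """Share of keys that become unavailable when one shard goes down."""
    lost = sum(1 for key in keys if shard_for(key, n_shards) == dead_shard)
    return lost / len(keys)
```

With four shards and 10,000 keys like `user:0` through `user:9999`, losing one shard takes out about a quarter of them.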

Redis has an unstable tool called sentinel which is supposed to fix some of these issues by managing slaving across a redis cluster for you. As far as I can tell in my limited experimentation, all it can do is detect some kinds of redis failures and change what the master is, at the cost of running yet another implementation of byzantine agreement. Of course, it still requires that you run either nonexistent sentinel-aware clients (which add a bunch of new, exciting failure modes to your application), or that you manage failover out-of-band using keepalived or carp. Which seems to sort of completely invalidate the point of having an application to manage clusters for you.

Other Gotchas

Redis has a parameter called maxmemory in its config file. Do you know what redis will do by default when it hits maxmemory? Absolutely nothing useful if you're using redis as a database. The default behavior is volatile-lru, which means that when it hits maxmemory, redis will examine keys that have an expiration time (keys set with SETEX, or given a TTL with EXPIRE) and LRU out some of them. It won't look at any non-expiring keys (which most of yours will be if you're using redis as a database), it won't consider objects by size, and it won't raise any errors. There are two sane choices for the maxmemory-policy option:

- allkeys-lru: Redis will, using its lossy LRU algorithm, choose a key from your entire keyspace to delete when memory usage approaches the limit.
- noeviction: Redis will return a "too much memory" error on writes. WHY GOD WHY IS THIS NOT THE DEFAULT!?
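Here's a toy model of which key each of these three policies would pick next. It uses exact LRU order (real Redis approximates LRU by sampling), ignores object size just like Redis does, and only answers "what would be evicted?", not what the server does afterwards. A return value of `None` means the policy offers nothing to evict.

```python
def eviction_candidate(lru_order, has_ttl, policy):
    """Return the key this policy would evict next, or None if it offers none.

    lru_order: keys from least to most recently used.
    has_ttl:   set of keys that carry an expiration time.
    """
    if policy == "allkeys-lru":
        pool = lru_order
    elif policy == "volatile-lru":
        # Only keys with an expiration are ever considered.
        pool = [key for key in lru_order if key in has_ttl]
    elif policy == "noeviction":
        pool = []
    else:
        raise ValueError(f"policy not modeled here: {policy}")
    return pool[0] if pool else None
```

Note how, with `volatile-lru`, a database full of plain `SET` keys yields no candidates at all, even though the least recently used key is sitting right there.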

Redis gets hella fragmented. Unlike traditional databases, which know how big a tuple/row is and can allocate memory accordingly, redis relies on slab-style allocation similar to memcached's. This means that over regular use it will get highly fragmented, and while it may only have 1GB of data in it, it might be taking up 10GB of RAM (which means up to 20GB of RAM while you're RDBing). This data is exposed in INFO, thankfully. Unfortunately, redis has no internal "cleanup" routine to reduce fragmentation; your only option is to take an RDB dump and then restart redis. Doing this because of an on-call page this morning, I freed up about 10GB of RAM on one of our clusters. It's sort of shameful that you have to be aware of this and willing to re-jigger your replication topology every few weeks just to prevent death by fragmentation, but what else do you expect?
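Here's a minimal sketch of watching for this. The relevant INFO memory fields are `used_memory` (what the allocator says redis is using) and `used_memory_rss` (what the OS has resident); redis also reports their ratio itself as `mem_fragmentation_ratio`. The 1.5 threshold and the function names are hypothetical. The parser handles raw INFO text, and the ratio functions also accept the dict that redis-py's `info("memory")` returns.

```python
def parse_info(text):
    """Parse raw INFO output ("field:value" lines, "# Section" headers)."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        key, _, value = line.partition(":")
        fields[key] = value
    return fields


def fragmentation_ratio(fields):
    """RSS the OS charges to redis divided by the bytes redis thinks it is using."""
    return int(fields["used_memory_rss"]) / int(fields["used_memory"])


def should_schedule_restart(fields, threshold=1.5):
    """Hypothetical alert rule: flag the instance for a dump-and-restart."""
    return fragmentation_ratio(fields) > threshold
```

The 1 GB of data in 10 GB of RAM from the example above would show up as a ratio of 10.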

We had an awesome issue at work because some user accidentally issued the command FLUSHALL to a production redis box. Why did a regular consumer have the ability to do that? Because Redis doesn't have any concept of permissions. The solution was to use redis's (non-runtime-alterable) rename-command config directive to rename the FLUSHALL command to something that the client wouldn't know about. That's somewhat like doing mv /bin/rm /bin/nobody-will-ever-guess-this-name as a way to fix the security of your Unix box where everyone has root.

Redis is inconsistent. The pidfile parameter takes a full path, but the dbfilename parameter takes a path relative to the dir parameter. The parameter for the AOF filename (appendfilename) does not appear anywhere in the documentation. You have to read config.c yourself to know what it is.

Redis is stupid. It traps SIGSEGV and overrides it to write an error to the log and longjmp back to where it was. I have no other words for that behavior.

Wrapping up

Obviously this is a lot of gripes. I want to emphasize that if you use redis as intended (as a slightly-persistent, not-HA cache), it's great. Unfortunately, more and more shops seem to be thinking that Redis is a full-service database and, as someone who's had to spend an inordinate amount of time maintaining such a setup, I can tell you it's not. If you're writing software and you're thinking "hey, it would be easy to just put a SET key value in this code and be done," please reconsider. There are lots of great products out there that are better for the overwhelming majority of use cases.

Well, that was cathartic. I look forward to your response flames, Internet.